Justice Education Society: Using AI to scale family law assistance from business hours to 24/7

Jan 12, 2026

The problem

JES operates a human-staffed “Ask JES” service that provides high-quality legal information, but it is available only during business hours and is limited by staff capacity. Families in crisis often need answers late at night or on weekends, yet the existing service left them waiting until the next business day.

The challenge

Family law is high-stakes and nuanced; a generic chatbot that hallucinates legal concepts could cause real-world harm. The hard part was building a system that could answer complex legal questions accurately while strictly refusing to answer if verified data wasn't available.

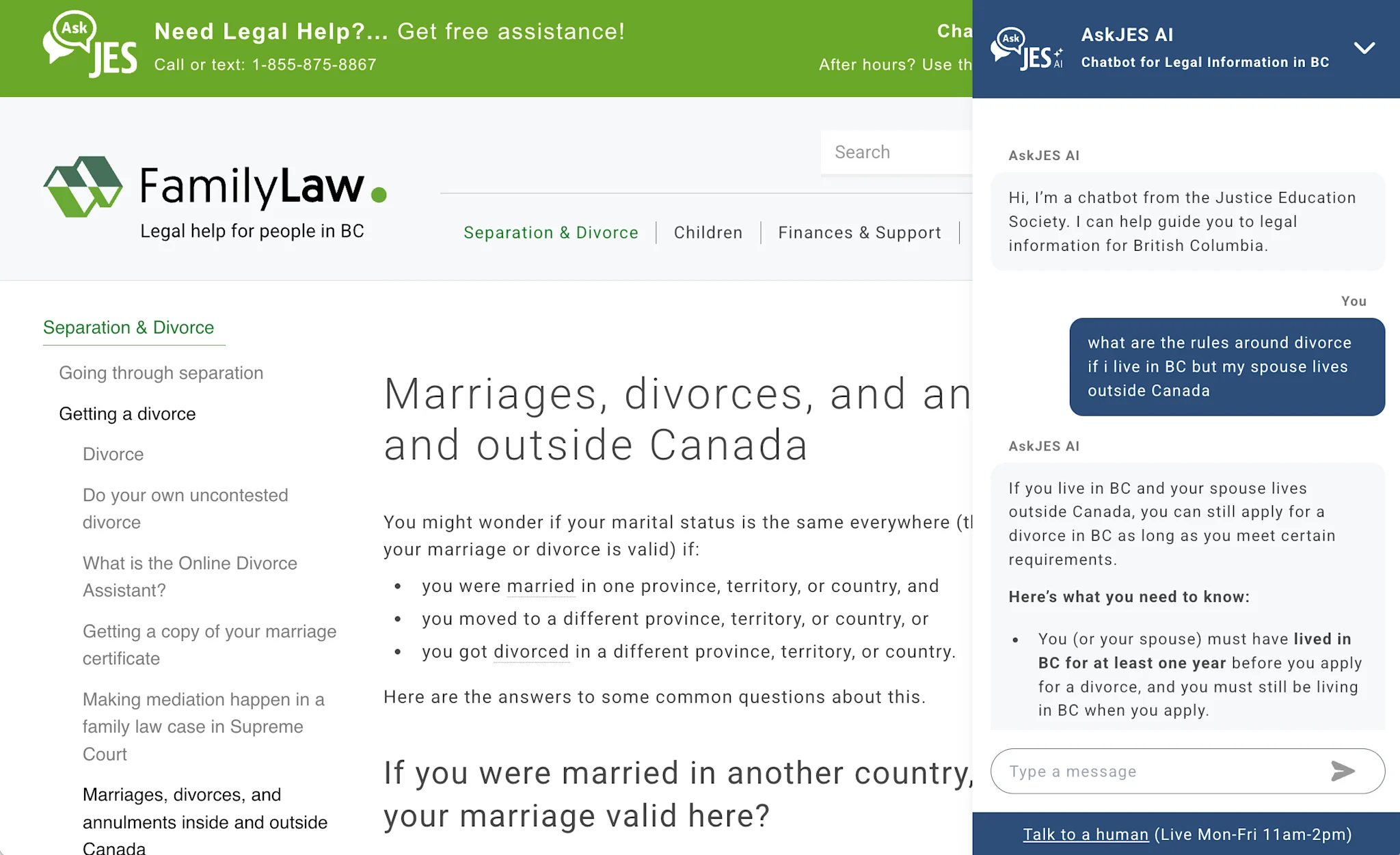

AskJES AI provides answers grounded in the legally reviewed information on FamilyLawInBC.ca

The solution

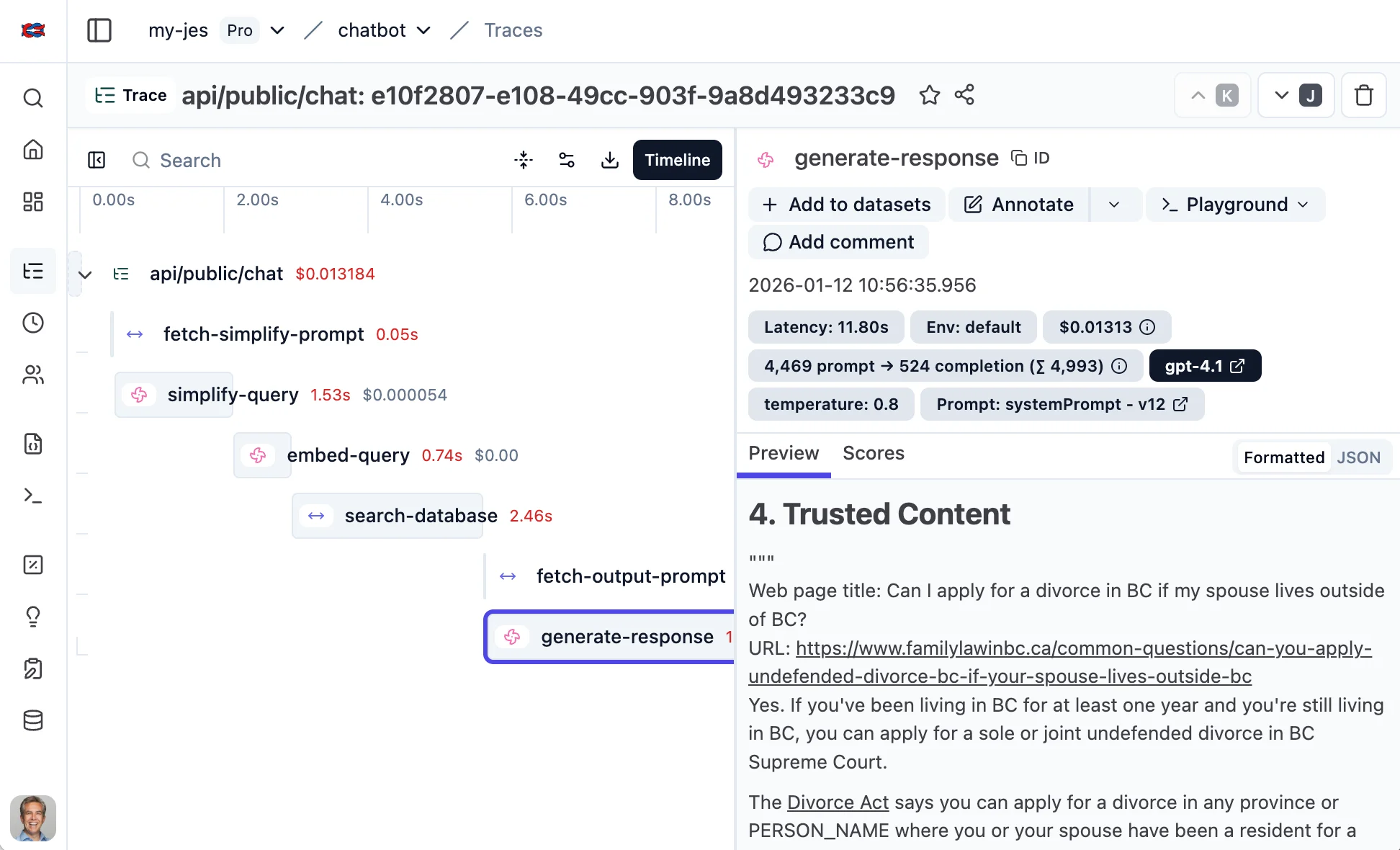

Tangowork built a Retrieval-Augmented Generation (RAG) system that scrapes and rebuilds its knowledge base nightly from legally reviewed information on FamilyLawInBC.ca. When a user asks a question, the system retrieves only relevant, vetted content from the vector database and instructs the Large Language Model to generate an answer based solely on that data. We integrated Langfuse for deep observability, allowing JES to trace the exact logic and website content behind every AI response.
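The retrieve-then-refuse flow described above can be illustrated with a minimal sketch. It is not the production code: the bag-of-words `embed` function stands in for a real embedding model and vector database, the passages and `example.org` URLs are placeholders, and the names `retrieve`, `build_prompt`, and the `min_score` threshold are assumptions made for illustration.

```python
import math
import re
from collections import Counter

# Placeholder passages; a real system would load vetted, scraped content.
PASSAGES = [
    {"url": "https://example.org/parenting-time",
     "text": "Placeholder passage about parenting time schedules"},
    {"url": "https://example.org/property",
     "text": "Placeholder passage about dividing family property"},
]

def embed(text):
    """Toy bag-of-words vector (assumption: stands in for an embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(question, passages, min_score=0.3, top_k=2):
    """Return up to top_k passages whose similarity clears the threshold."""
    q = embed(question)
    scored = sorted(((cosine(q, embed(p["text"])), p) for p in passages),
                    key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:top_k] if score >= min_score]

def build_prompt(question, passages):
    """Build a grounded prompt, or return None to signal a refusal."""
    hits = retrieve(question, passages)
    if not hits:
        return None  # nothing vetted to ground on: refuse instead of guessing
    context = "\n".join(f"[{p['url']}] {p['text']}" for p in hits)
    return ("Answer using only the sources below. If they do not contain "
            "the answer, say you cannot answer.\n\n"
            f"Sources:\n{context}\n\nQuestion: {question}")
```

In this sketch, `build_prompt("What is parenting time?", PASSAGES)` yields a prompt that includes only the matching passage, while an off-topic question returns `None`, which the caller would turn into a refusal message rather than sending anything to the model.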

An administration console logs conversations and helps JES understand exactly how the chatbot arrived at its answer. It also tracks latency and cost.
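As a rough sketch of what such a log entry might capture, the example below records the question, the source URLs, the answer, the latency, and an estimated cost for one response. It does not use the Langfuse API; the `Trace` fields, the `traced_answer` helper, and the per-token price are all hypothetical.

```python
import time
from dataclasses import dataclass

# Hypothetical price; real pricing depends on the model and provider.
PRICE_PER_1K_TOKENS_USD = 0.002

@dataclass
class Trace:
    question: str
    source_urls: list
    answer: str
    latency_s: float
    cost_usd: float

def estimate_cost(prompt_tokens, completion_tokens,
                  price_per_1k=PRICE_PER_1K_TOKENS_USD):
    """Estimate cost from token counts at a flat per-1K-token price."""
    return (prompt_tokens + completion_tokens) / 1000 * price_per_1k

def traced_answer(question, source_urls, generate):
    """Call generate(question) and record timing and cost alongside the answer.

    generate is any callable returning (answer, prompt_tokens, completion_tokens).
    """
    start = time.perf_counter()
    answer, prompt_tokens, completion_tokens = generate(question)
    latency = time.perf_counter() - start
    return Trace(question, list(source_urls), answer, latency,
                 estimate_cost(prompt_tokens, completion_tokens))
```

Keeping the source URLs in the same record as the answer is what makes it possible to review which website content a given response was based on.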

The results

Scaled availability: Transformed a limited-hours service into a 24/7/365 resource, removing capacity constraints for families in crisis.

Optimized human expertise: By automating responses to most inquiries, the AI frees up Ask JES staff to focus their limited time on complex, specific family law issues that require a human touch.

Content gap analysis: Through Langfuse observability, JES can now identify specific questions the AI refuses to answer, revealing exactly what content is missing from their website and allowing them to fill those gaps.
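One simple way to surface such gaps, assuming each logged conversation carries a `refused` flag (a hypothetical field, not a documented Langfuse attribute), is to count the most frequently refused questions:

```python
from collections import Counter

def content_gaps(log, top_n=3):
    """Return the most frequently refused questions with their counts."""
    refused = Counter(
        entry["question"].strip().lower().rstrip("?")
        for entry in log
        if entry["refused"]
    )
    return refused.most_common(top_n)

# Illustrative log entries, not real user data.
LOG = [
    {"question": "How do I change my name?", "refused": True},
    {"question": "how do i change my name", "refused": True},
    {"question": "What is parenting time?", "refused": False},
    {"question": "Can I move provinces?", "refused": True},
]
```

Here `content_gaps(LOG)` ranks the name-change question first because it was refused twice, pointing to a topic the website may not yet cover.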

Zero-hallucination confidence: The system is engineered to refuse answers rather than guess, ensuring users only receive information firmly rooted in verified legal text.